Thirty years ago, Kodak dominated the business of making photographs. It made cameras, sold film, and employed 140,000 people. Now Instagram handles many more images than Kodak ever did, but when it was sold to Facebook in 2012, it employed 13 people. This striking comparison was made by Jaron Lanier in his book “Who Owns the Future?”, to stress the transition we have made to a world in which value is increasingly created, not from plant and factories and manufacturing equipment, but from software, from brands, from business processes – in short, from intangibles. The rise of the intangible economy is the theme of a new book by Jonathan Haskel and Stian Westlake, “Capitalism without Capital”. This is a marvellously clear exposition of what makes investment in intangibles different from investment in the physical capital of plant and factories.

These differences are summed up in a snappily alliterative four S’s. Intangible assets are scalable: having developed a slick business process for selling over-priced coffee, Starbucks could very rapidly expand all over the world. The costs of developing intangible assets are sunk – having spent a lot of money building a brand, if the business doesn’t work out it’s much harder to recover those costs than it would be to sell a fleet of vans. Intangibles have spillovers – despite best efforts to protect intellectual property and keep the results secret, the new knowledge developed in a company’s research programme inevitably leaks out, benefitting other companies and society at large in ways that the originating firm can’t benefit from. And intangibles demonstrate synergies – the value of many ideas together is usually greater – often very much greater – than the sum of the parts.

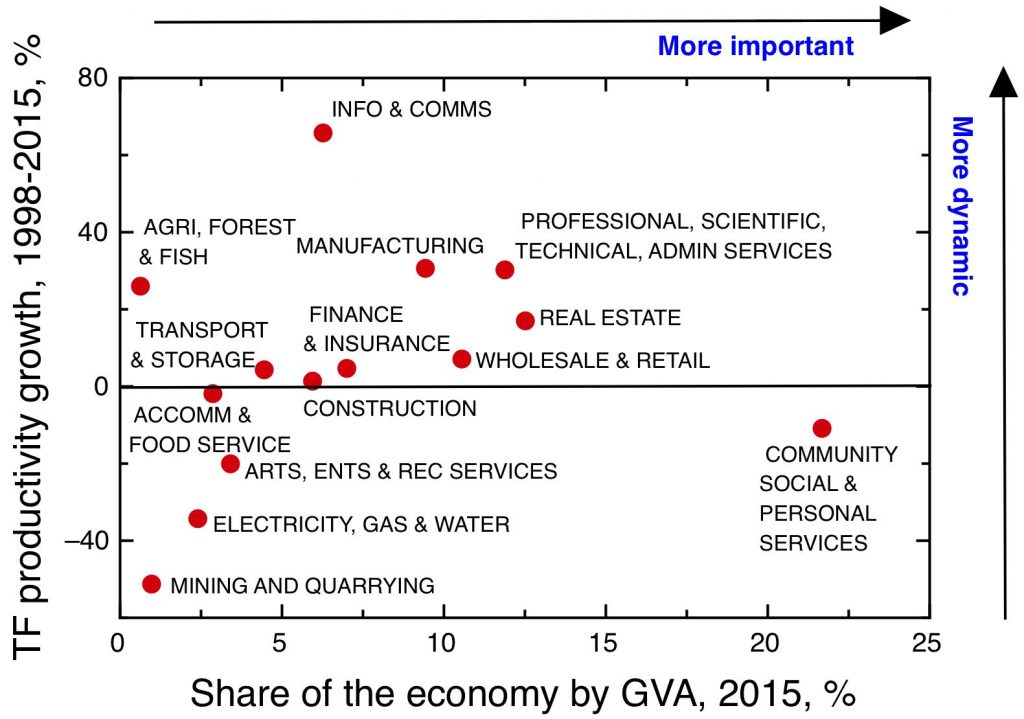

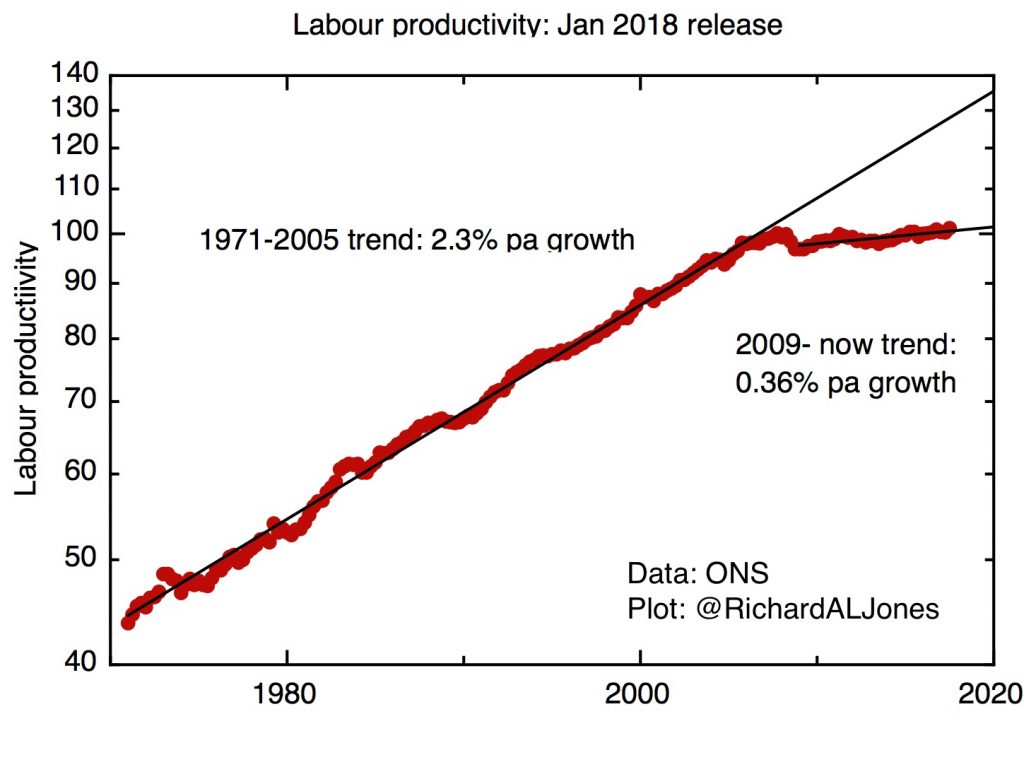

These characteristics are a challenge to our conventional way of thinking about how economies work. Haskel and Westlake convincingly argue that these new characteristics could help explain some puzzling and unsatisfactory characteristics of our economy now – the stagnation we’re seeing in productivity growth, and the growth of inequality.

But how has this situation arisen? To what extent is the growth of the intangible economy inevitable, and how much arises from political and economic choices our society has made?

Let’s return to the comparison between Kodak and Instagram that Jaron Lanier makes – a comparison which I think is fundamentally flawed. The impact of mass digitisation of images is obvious to everyone who has a smartphone. But just because the images are digital doesn’t mean they don’t need physical substrates to capture, store and display them. Instagram may be a company based entirely on intangible assets, but it couldn’t exist without a massive material base. The smartphones themselves are physical artefacts of enormous sophistication, the product of supply chains of great complexity, with materials and components being made in many factories that themselves use much expensive, sophisticated and very physical plant. And while we might think of the “cloud” as some disembodied place where the photographs live, the cloud is, as someone said, just someone else’s computer – or more accurately, someone else’s giant, energy-hogging server farm.

Much of the intangible economy only has value inasmuch as it is embodied in physical products. This, of course, has always been true. The price of an expensive 18th-century clock embodied the skill and knowledge of the craftsmen who made it, itself built up through their investments in learning the trade, the networks of expertise in which so much tacit knowledge was embedded, and the value of the brand that the maker had built up. So what’s changed? We still live in a material world, and these intangible investments, important as they are, are still largely realised in physical objects.

It seems to me that the key difference isn’t so much that an intangible economy has grown in place of a material economy; it’s that we’ve moved to a situation in which the relative contributions of the material and the intangible have become much more separable. Airbnb isn’t an entirely ethereal operation; you might book your night away through a slick app, but it’s still bricks and mortar that you stay in. The difference between Airbnb and a hotel chain lies in the way ownership and management of the accommodation is separated from the booking and rating systems. How much of this unbundling is inevitable, and how much is the result of political choices? This is the crucial question we need to answer if we are to design policies that will allow our economies and societies to flourish in this new environment.

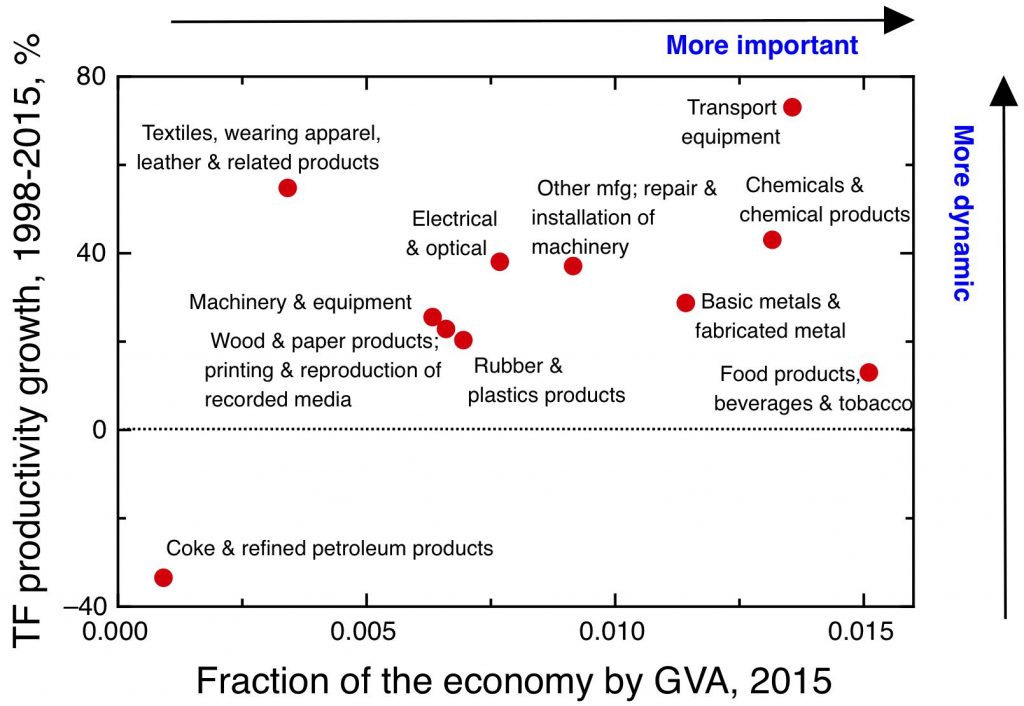

These questions are dealt with early on in Haskel and Westlake’s book, but I think they deserve more analysis. One factor that Haskel and Westlake correctly point to is simply the continuing decrease in the cost of material stuff as a result of material innovation. This inevitably increases the value of services – delivered by humans – relative to material goods, a trend known as Baumol’s cost disease (a very unfortunate and misleading name, as I’ve discussed elsewhere). I think this has to be right, and it surely is an irreversible continuing trend.

But two other factors seem important too – both discussed by Haskel and Westlake, but without drawing out their full implications. One is the way the ICT industry has evolved, in a way that emphasises commodification of components and open standards. This has almost certainly been beneficial, and without it the platform companies that have depended on this huge material base would not have been able to arise and thrive in the same way. Was it inevitable that things turned out this way? I’m not sure, and it’s not obvious to me that if or when a new wave of ICT innovation arises (Majorana fermion based quantum computing, maybe?), to restart the now stuttering growth of computing power, this would unfold in the same way.

The other is the post-1980s business trend to “unbundling the corporation”. We’ve seen a systematic process by which the large, vertically integrated, corporations of the post-war period have outsourced and contracted out many of their functions. This process has been important in making intangible investments visible – in the days of the corporation, many activities (organisational development, staff training, brand building, R&D) were carried out within the firm, essentially outside the market economy – their contributions to the balance sheet being recognised only in that giant accounting fudge factor/balancing item, “goodwill”. As these functions become outsourced, they produce new, highly visible enterprises that specialise entirely in these intangible investments – management consultants, design houses, brand consultants and the like.

This process became supercharged as a result of the wave of globalisation we have just been through. The idea that one could unbundle the intangible and the material has developed in a context where manufacturing, also, could be outsourced to low-cost countries – particularly China. Companies now can do the market research and design to make a new product, outsource its manufacture, and then market it back in the UK. In this way the parts of the value of the product ascribed to the design and marketing can be separated from the value added by manufacturing. I’d argue that this has been a powerful driver of the intangible economy, as we’ve seen it in the developed world. But it may well be a transient.

On the one hand, the advantages of low-cost labour that drove the wave of manufacturing outsourcing will be eroded, both by a tightening labour market in Far Eastern economies as they become more prosperous, and by a relative decline in the contribution of labour to the cost of manufacturing as automation proceeds. On the other hand, the natural tendency of those doing the manufacturing is to attempt to capture more of the value by doing their own design and marketing. In smartphones, for example, this road has already been travelled by Korean manufacturer Samsung, and we see Chinese companies like Xiaomi rapidly moving in the same direction, potentially eroding the margins of that champion of the intangible economy, Apple.

One key driver that might reverse the separation of the material from the intangible is the realisation that this unbundling comes with a cost. The importance of transaction costs in Coase’s theory of the firm is highlighted in Haskel and Westlake’s book, in a very interesting chapter which considers the best form of organisation for a firm operating in the intangible economy. Some argue that a lowering of transaction costs through the application of IT renders the firm more or less redundant, and we should, and will, move to a world where everyone is an independent entrepreneur, contracting out their skills to the highest bidder. As Haskel and Westlake point out, this hasn’t happened; organisations are still important, even in the intangible economy, and organisations need management, though the types of organisation and styles of management that work best may have evolved. And power matters: big organisations can exert power and influence political systems in ways that little ones cannot.

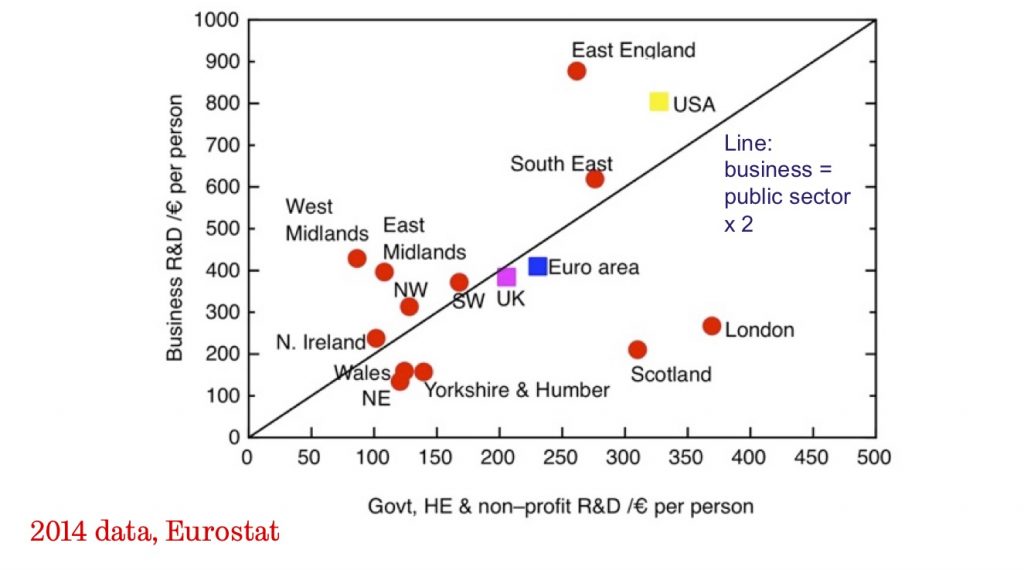

One type of friction that I think is particularly important relates to knowledge. The turn to market liberalism has been accompanied by a reification of intellectual property which I think is problematic. This is because the drive to consider chunks of protectable IP – patents – as tradable assets with an easily discoverable market value doesn’t really account for the synergies that Haskel and Westlake correctly identify as central to intangible assets. A single patent rarely has much value on its own – it gets its value as part of a bigger system of knowledge, some of it in the form of other patents, but much more of it as tacit knowledge held in individuals and networks.

The business of manufacturing itself is often the anchor for those knowledge networks. For an example of this, I’ve written elsewhere about the way in which the UK’s early academic lead in organic electronics didn’t translate into a business at scale, despite a strong IP position. The synergies with the many other aspects of the display industry, with its manufacturers and material suppliers already firmly located in the Far East, were too powerful.

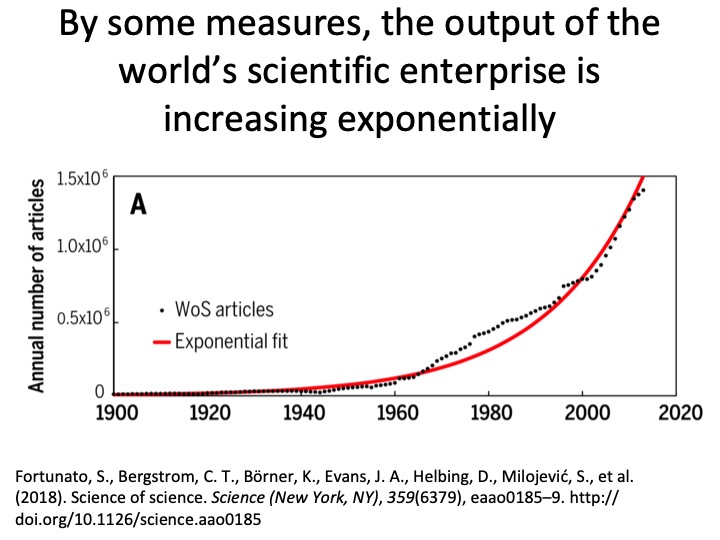

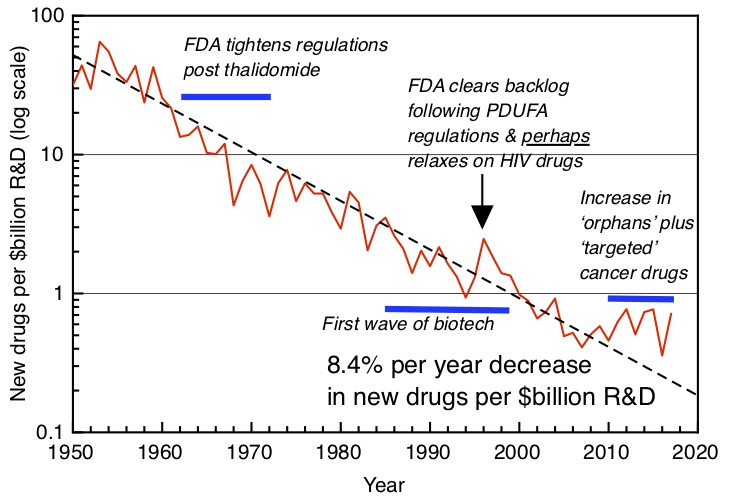

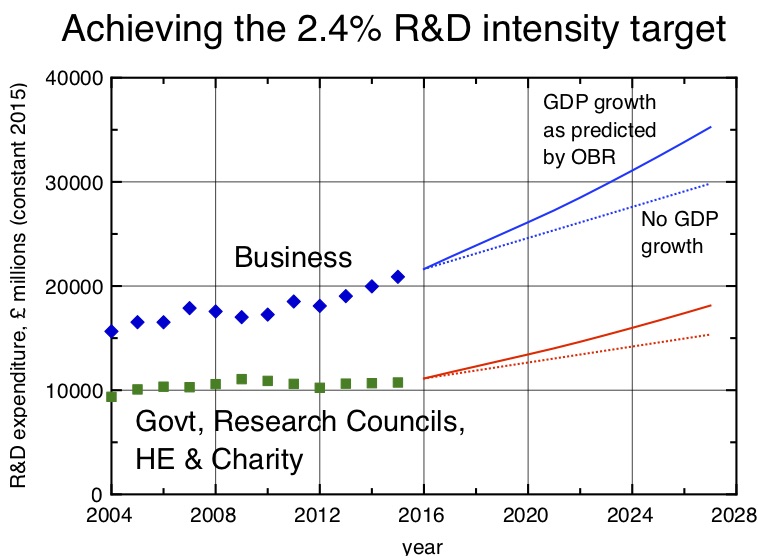

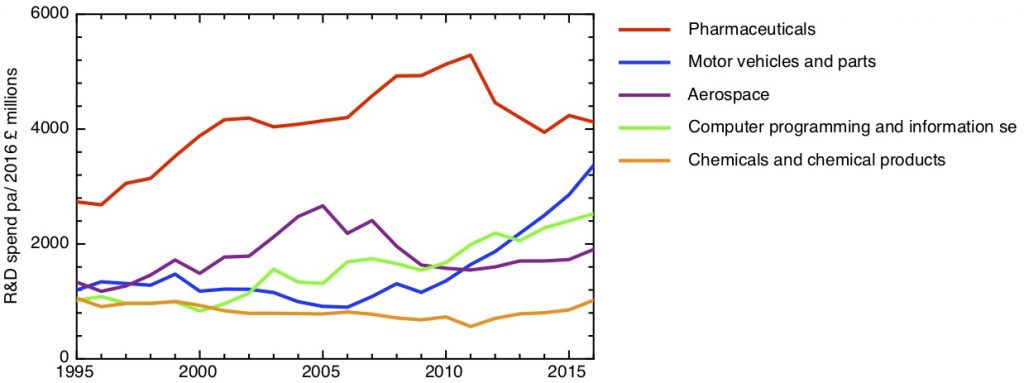

The unbundling strategy has its limits, and so too, perhaps, does the process of separating the intangible from the material. What is clear, as Haskel and Westlake’s book shows, is that the way our economy currently deals with intangibles has led to wider problems. Intangible investments, for example into the R&D that underlies the new technologies that drive economic growth, do have special characteristics – spillovers and synergies – which lead our economies to underinvest in them, and that underinvestment must surely be a big driver of our current economic and political woes.

“Capitalism without Capital” really is as good as everyone is saying – it’s clear in its analysis, enormously helpful in clarifying assumptions and definitions that are often left unstated, and full of fascinating insights. It’s also rather a radical book, in an understated way. It’s difficult to read it without concluding that our current variety of capitalism isn’t working for us in the conditions we now find ourselves in, with growing inequality, stuttering innovation and stagnating economies. The remedies for this situation that the book proposes are difficult to disagree with; what I’m not sure about is whether they are far-reaching enough to make much difference.