In what way, and on what basis, should we attempt to steer the development of technology? This is the fundamental question that underlies at least two discussions that I keep coming back to here – how to do industrial policy and how to democratise science. But some would simply deny the premise of these discussions, and argue that technology can’t be steered, and that the market is the only effective way of incorporating public preferences into decisions about technology development. This is a hugely influential point of view which goes with the grain of the currently hegemonic neo-liberal, free market dominated world-view. It originates in the arguments of Friedrich Hayek against the 1940s vogue for scientific planning, it incorporates Michael Polanyi’s vision of an “independent republic of science”, and it fits the view of technology as an autonomous agent which unfolds with a logic akin to that of Darwinian evolution – what one might call the “Wired” view of the world, eloquently expressed in Kevin Kelly’s recent book “What Technology Wants”. It’s a coherent, even seductive, package of beliefs; although I think it’s fatally flawed, it deserves serious examination.

Hayek’s argument against planning (his 1945 article The Use of Knowledge in Society makes this very clearly) rests on two insights. Firstly, he insists that the relevant knowledge that would underpin the rational planning of an economy or a society isn’t limited to scientific knowledge, and must include the tacit, unorganised knowledge of people who aren’t experts in the conventional sense of the word. This kind of knowledge, then, can’t rest solely with experts, but must be dispersed throughout society. Secondly, he claims that the most effective – perhaps the only – way in which this distributed knowledge can be aggregated and used is through the mechanism of the market. If we apply this kind of thinking to the development of technology, we’re led to the idea that technological development would happen in the most effective way if we simply allow many creative entrepreneurs to try different ways of combining different technologies and to develop new ones on the basis of existing scientific knowledge and what developments of that knowledge they are able to make. When the resulting innovations are presented to the market, the ones that survive will, by definition, be the ones that best meet human needs. Stated this way, the connection with Darwinian evolution is obvious.

One objection to this viewpoint is essentially moral in character. The market certainly aggregates the preferences and knowledge of many people, but it necessarily gives more weight to the views of people with more money, and the distribution of money doesn’t necessarily coincide with the distribution of wisdom or virtue. Some free market enthusiasts simply assert the contrary, following Ayn Rand. There are, though, some much less risible moral arguments in favour of free markets which emphasise the positive virtues of pluralism, and even those opponents of libertarianism who point to the naivety of believing that this pluralism can be maintained in the face of highly concentrated economic and political power need to answer important questions about how pluralism can be maintained in any alternative system.

What should be less contentious than these moral arguments is an examination of the recent history of technological innovation. This shows that the technologies that made the modern world – in all their positive and negative aspects – are largely the result of the exercise of state power, rather than of the free enterprise of technological entrepreneurs. New technologies were largely driven by large scale interventions by the Warfare States that dominated the twentieth century. The military-industrial complexes of these states began long before Eisenhower popularised this name, and existed not just in the USA, but in Wilhelmine and Nazi Germany, in the USSR, and in the UK (David Edgerton’s “Warfare State: Britain 1920-1970” gives a compelling reinterpretation of modern British history in these terms). At the beginning of the century, for example, the Haber-Bosch process for fixing nitrogen was rapidly industrialised by the German chemical company BASF. It’s difficult to think of a more world-changing innovation – more than half the world’s population wouldn’t now be here if it hadn’t been for the huge growth in agricultural productivity that artificial fertilisers made possible. However, the importance of this process for producing the raw materials for explosives ensured that the German state took much more than a spectator’s role. Vaclav Smil, in his book Enriching the Earth, quotes an estimate for the development cost of the Haber-Bosch process of US$100 million at 1919 prices (roughly US$1 billion in current money, equating to about $19 billion in terms of its share of the economy at the time), of which about half came from the government. Many more recent examples of state involvement in innovation are cited in Mariana Mazzucato’s pamphlet The Entrepreneurial State.
Perhaps one of the most important stories is the role of state spending in creating the modern IT industry; computing, the semiconductor industry and the internet are all largely the outcome of US military spending.

Of course, the historical fact that the transformative, general purpose technologies that were so important in driving economic growth in the twentieth century emerged as a result of state sponsorship doesn’t by itself invalidate the Hayekian thesis that innovation is best left to the free market. To understand the limitations of this picture, we need to return to Hayek’s basic arguments. Under what circumstances does the free market fail to aggregate information in an optimal way? People are not always rational economic actors – they know what they want and need now, but they aren’t always good at anticipating what they might want if things they can’t imagine become available, or what they might need if conditions change rapidly. There’s a natural cognitive bias to give more weight to the present, and less to an unknowable future. Just like natural selection, the optimisation process that the market carries out is necessarily local, not global.
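The difference between local and global optimisation can be made concrete with a toy sketch. Everything in it is an assumption made for illustration: the `landscape` function and its numbers are invented, and `hill_climb` stands in, very loosely, for a process that only ever accepts the next small improvement. It is not a model of any real market.

```python
def landscape(x: int) -> float:
    """Hypothetical payoff landscape: a low peak at x=2 and a higher one at x=8."""
    heights = [0, 2, 3, 2, 1, 0, 1, 4, 9, 4, 0]
    return heights[x]


def hill_climb(start: int, fitness, lo: int = 0, hi: int = 10) -> int:
    """Move to the better neighbouring point until no neighbour is an improvement."""
    x = start
    while True:
        neighbours = [n for n in (x - 1, x + 1) if lo <= n <= hi]
        best = max(neighbours, key=fitness)
        if fitness(best) <= fitness(x):
            return x  # no neighbour is better: a local optimum
        x = best


# Incremental search started near the low peak gets stuck there.
local_peak = hill_climb(1, landscape)

# An exhaustive search over the whole landscape finds the higher peak.
global_peak = max(range(11), key=landscape)

print(local_peak, landscape(local_peak))
print(global_peak, landscape(global_peak))
```

Started at a different point, say `hill_climb(6, landscape)`, the same procedure does reach the higher peak; where incremental improvement ends up depends on where it starts, which is the sense in which it is local.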

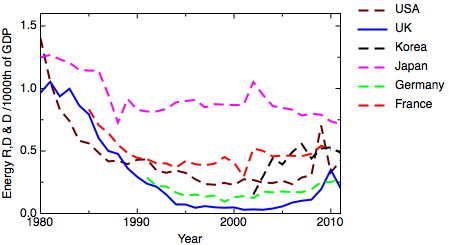

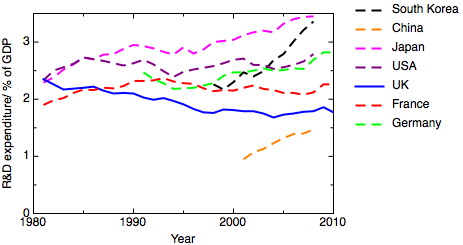

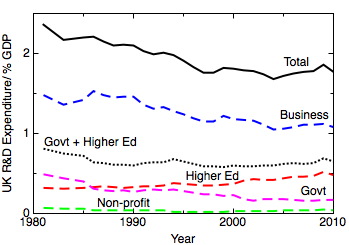

So when does the Hayekian argument for leaving innovation to the market not apply? The free market works well for evolutionary innovation – local optimisation is good at solving present problems with the tools at hand now. But it is unable to mobilise resources on a large scale for big problems whose solution will take more than a few years. So, we’d expect market-driven innovation to fail to deliver whenever timescales for development are too long, or the expense of development too great. Because capital markets are now short-term to the point of irrationality (as demonstrated by a Bank of England study by Andrew Haldane), the private sector rejects long term investments in infrastructure and R&D, even if the net present value of those investments would be significantly positive. In the energy sector, for example, we saw widespread liberalisation of markets across the world in the 1990s. One predictable consequence of this has been a collapse of private sector R&D in the energy sector (illustrated for the case of the USA by Dan Kammen in The Incredible Shrinking Energy R&D Budget).
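To see how excessive discounting can turn a worthwhile long-term project into one that gets rejected, here is a minimal net present value sketch. The cash flows and both discount rates are invented for illustration and are not taken from the Bank of England study; `npv` is just the textbook formula.

```python
def npv(rate: float, cash_flows: list[float]) -> float:
    """Net present value, where cash_flows[t] arrives t years from now."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


# Hypothetical project: spend 100 now, wait five years with no return,
# then receive 30 a year for ten years.
cash_flows = [-100.0] + [0.0] * 5 + [30.0] * 10

patient = npv(0.05, cash_flows)    # assumed "reasonable" discount rate
impatient = npv(0.15, cash_flows)  # assumed excessively short-term rate

print(f"NPV at 5%:  {patient:.1f}")
print(f"NPV at 15%: {impatient:.1f}")
```

With these assumed numbers the project is clearly worth doing at the lower rate and is rejected at the higher one, even though nothing about the project itself has changed.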

The contrast is clear if we compare two different cases of innovation – the development of new apps for the iPhone, and the development of innovative new passenger aircraft, like the composite-based Boeing Dreamliner and Airbus A350. The world of app development is one in which tens or hundreds of thousands of people can and do try out all sorts of ideas, a few of which have turned out to fulfil an important and widely appreciated need and have made their developers rich. This is a world that’s well described by the Hayekian picture of experimentation and evolution – the low barriers to entry and the ease of widespread distribution of the products reward experimentation. Making a new airliner, in contrast, involves years of development and outlays of tens of billions of dollars in development cost before any products are sold. Unsurprisingly, the only players are two huge companies – essentially a world duopoly – each of which is in receipt of substantial state aid of one form or another. The lesson is that technological innovation doesn’t just come in one form. Some innovation – with low barriers to entry, often building on existing technological platforms – can be done by individuals or small companies, and can be understood well in terms of the Hayekian picture. But innovation on a larger scale, the more radical innovation that leads to new general purpose technologies, needs either a large company with a protected income stream or outright state action. In the past a company able to carry out innovation on this scale would typically have been a state sponsored “national champion”, supported perhaps by guaranteed defence contracts, or the beneficiary of a monopoly or cartel, such as the postwar Bell Labs.

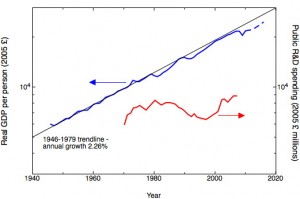

If the prevalence of this Hayekian thinking about technological innovation really does mean that we’re less able now to introduce major, world-changing innovations than we were 50 years ago, this would matter a great deal. One way of thinking about this is in evolutionary terms – if technological innovation is only able to proceed incrementally, there’s a risk that we’re less able to adapt to sudden shocks, we’re less able to anticipate the future and we’re at risk of being locked into technological trajectories that we can’t alter later in response to unexpected changes in our environment or unanticipated consequences. I’ve written earlier about the suggestion that, far from seeing universal accelerating change, we’re currently seeing innovation stagnation. The risk is that we’re seeing less in the way of really radical innovation now, at a time when pressing issues like climate change, peak cheap oil and demographic transitions make innovation more necessary than ever. We are seeing a great deal of very rapid innovation in the world of information, but this rapid pace of change in one particular realm has obscured much less rapid growth in the material realm and the biological realm. It’s in these realms that slow timescales and the large scale of the effort needed mean that the market seems unable to deliver the innovation we need.

It’s not going to be possible, nor would it be desirable, for us to return to the political economies of the mid-twentieth century warfare states that delivered the new technologies that underlie our current economies. Whatever other benefits the turn to free markets may have delivered, it seems to have been less effective at providing radical innovation, and with the need for those radical innovations becoming more urgent, some rethinking is now required.