The following is the introduction to a working paper I wrote while recovering from surgery a couple of months ago. This brings together much of what I’ve been writing over the last year or two about productivity, science and innovation policy and the need to rebalance the UK’s innovation system to increase R&D capacity outside London and the South East. It discusses how we should direct R&D efforts to support big societal goals, notably the need to decarbonise our energy supply and refocus health-related research to make sure our health and social care system is humane and sustainable. The full (53 page) paper can be downloaded here.

We should rebuild the innovation systems of those parts of the country outside the prosperous South East of England. Public investments in new translational research facilities will attract private sector investment, bring together wider clusters of public and business research and development, institutions for skills development, and networks of expertise, boosting innovation and leading to productivity growth. In each region, investment should be focused on industrial sectors that build on existing strengths, while exploiting opportunities offered by new technology. New capacity should be built in areas like health and social care, and the transition to low carbon energy, where the state can use its power to create new markets to drive the innovation needed to meet its strategic goals.

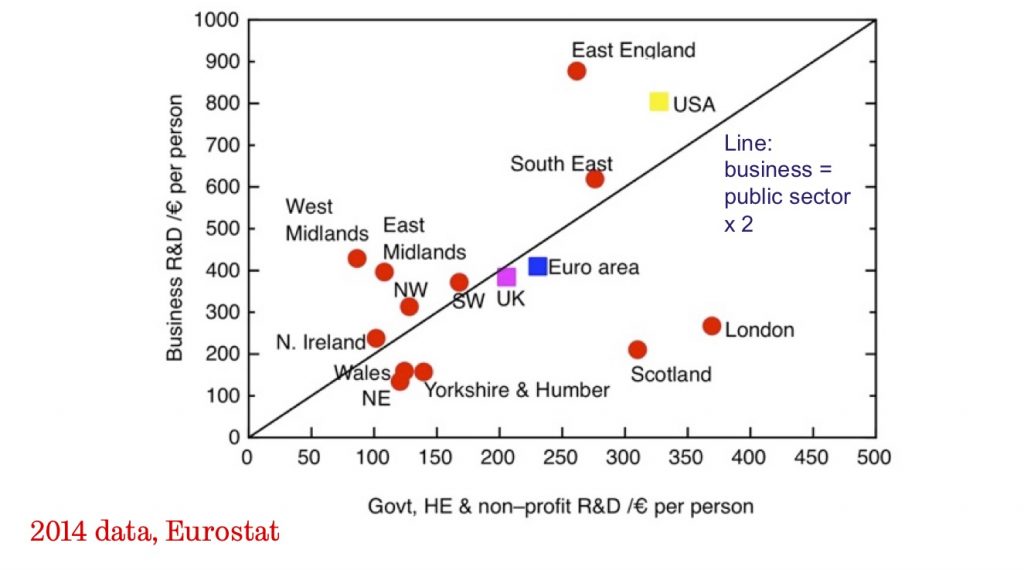

This would address two of the UK’s biggest structural problems: its profound disparities in regional economic performance, and a research and development intensity – especially in the private sector and for translational research – that is low compared to competitors. By focusing on ‘catch-up’ economic growth in the less prosperous parts of the country, this plan offers the most realistic route to generating a material change in the total level of economic growth. At the same time, it should make a major contribution to reducing the political and social tensions that have become so obvious in recent years.

The global financial crisis brought about a once-in-a-lifetime discontinuity in the rate of growth of economic quantities such as GDP per capita, labour productivity and average incomes; their subsequent decade-long stagnation signals that this event was not just a blip, but a transition to a new, deeply unsatisfactory, normal. A continuation of the current policy direction will not suffice; change is needed.

Our post-crisis stagnation has more than one cause. Some sources of pre-crisis prosperity have declined, and will not – and should not – come back. North Sea oil and gas production peaked around the turn of the century. Financial services provided a motor for the economy in the run-up to the global financial crisis, but this proved unsustainable.

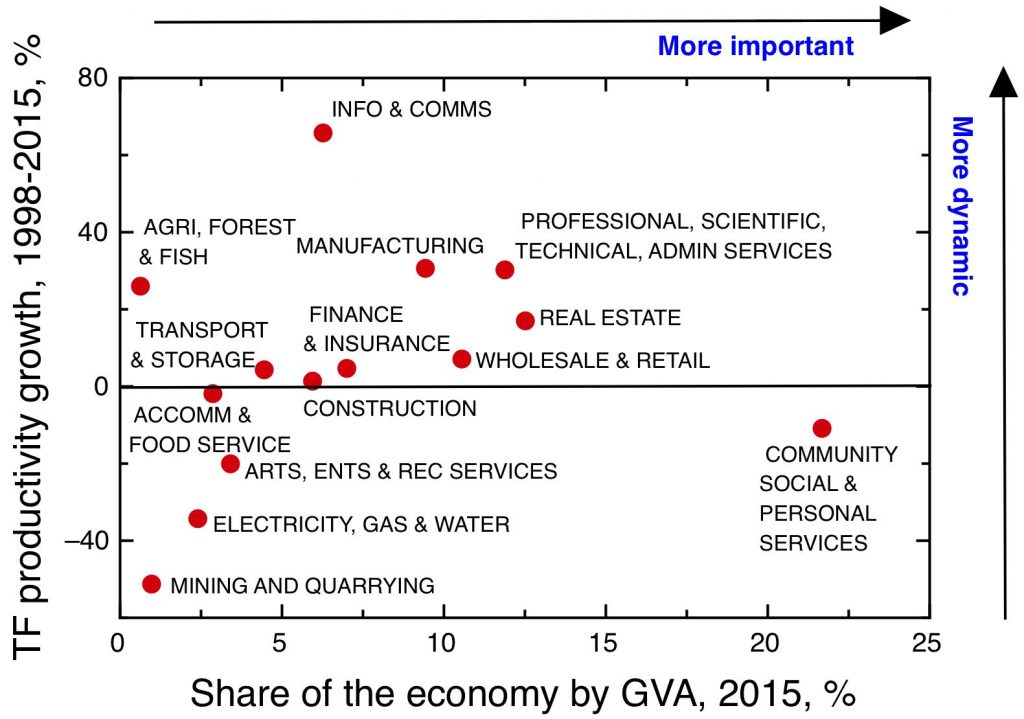

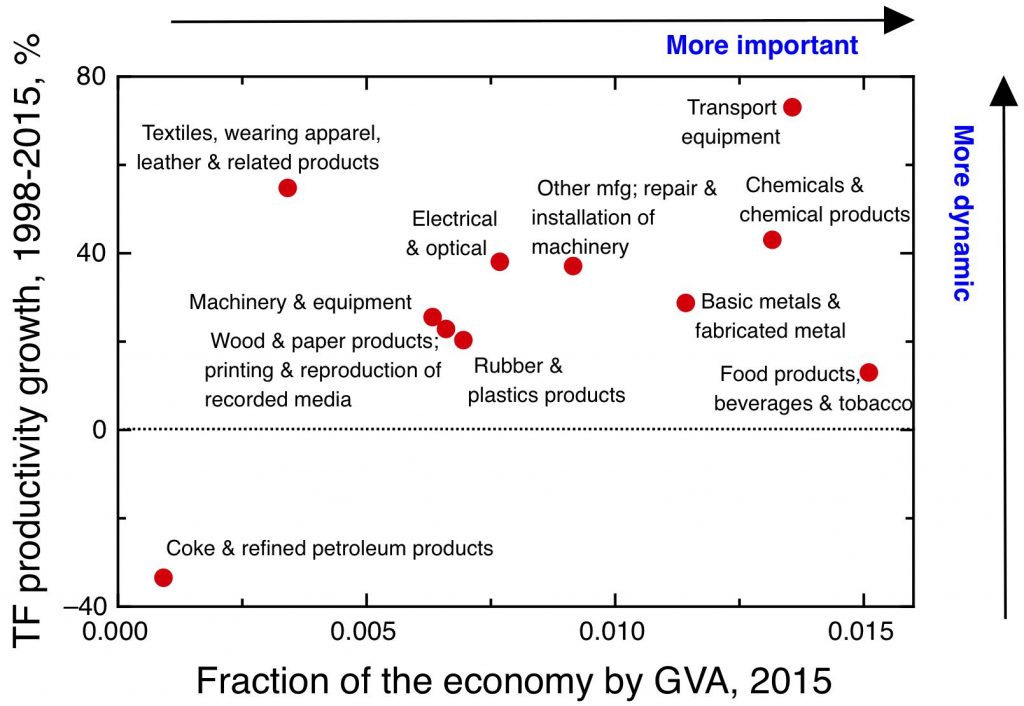

Beyond the unavoidable headwinds imposed by the end of North Sea oil and the financial services bubble, the wider economy has disappointed too. There has been a general collapse in total factor productivity growth – the economy is less able to create higher value products and services from the same inputs than in previous decades. This is a problem of declining innovation in its broadest sense.

There are some industry-specific issues. The pharmaceutical industry, for example, has been the UK’s leading science-led industry, and was a major driver of productivity growth before 2007; it has been suffering from a worldwide malaise, in which lucrative new drugs seem harder and harder to find.

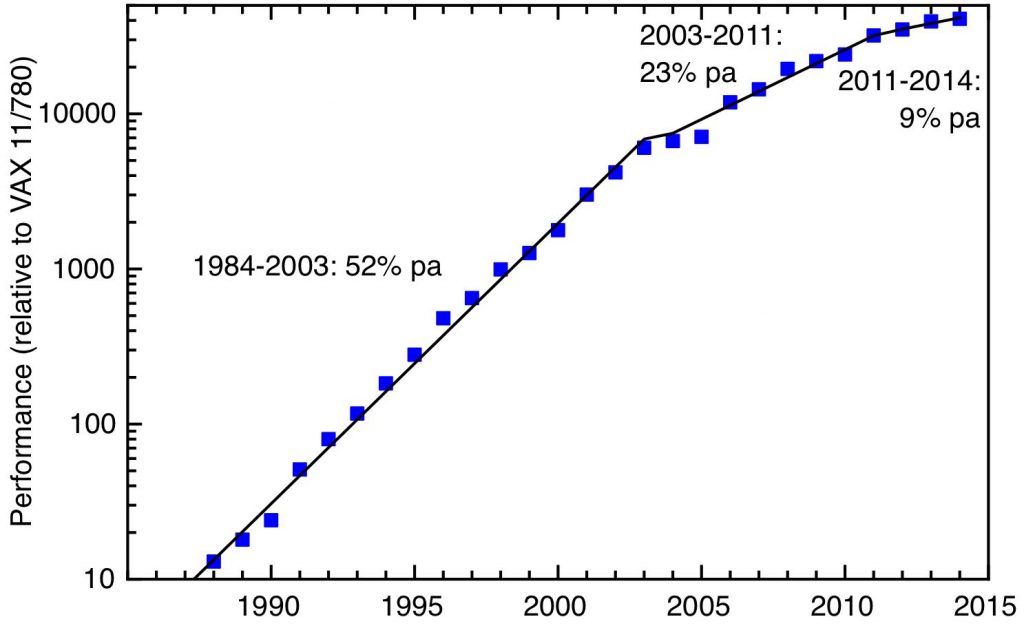

Yet many areas of innovation are flourishing, presenting opportunities to create new, high value products and services. It’s easy to get excited about developments in machine learning, the ‘internet of things’ and ‘Industrie 4.0’, in biotechnology, synthetic biology and nanotechnology, in new technologies for generating and storing energy.

But the productivity data shows that UK companies are not taking enough advantage of these opportunities. The UK economy is not able to harness innovation at a sufficient scale to generate the economic growth we need.

Up to now, the UK’s innovation policy has been focused on academic science. We rightly congratulate ourselves on the strength of our science base, as measured by the Nobel prizes won by UK-based scientists and the impact of their publications.

Despite these successes, the UK’s wider research and development base suffers from three faults:

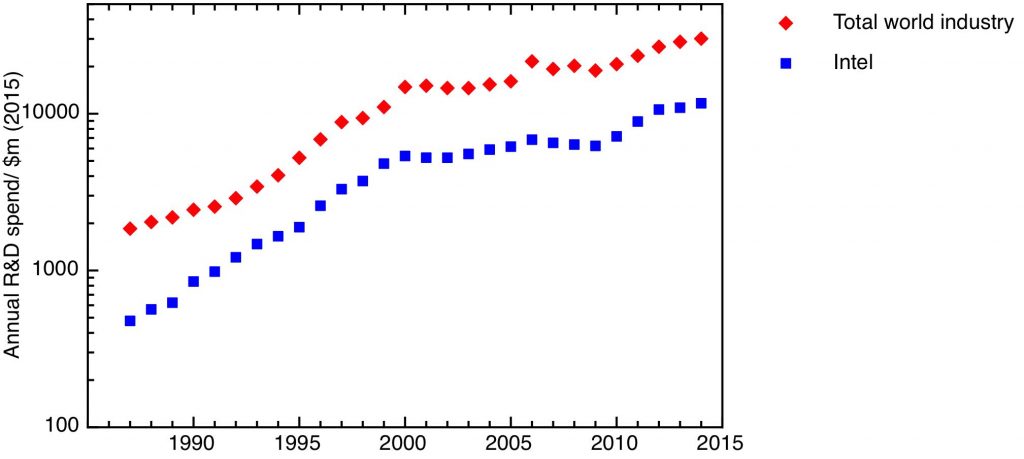

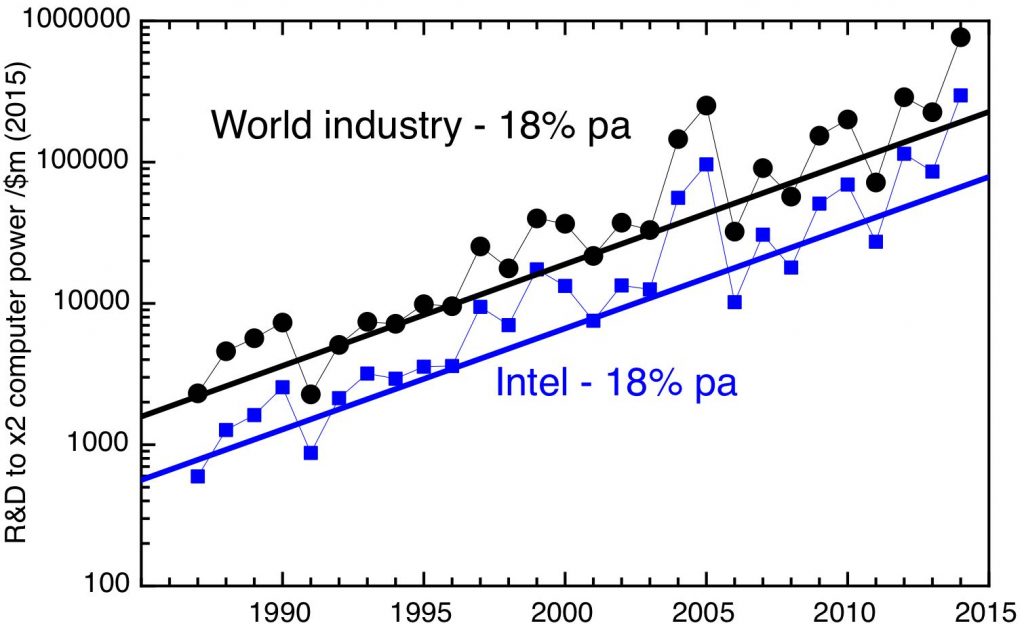

• It is too small for the size of our economy, as measured by R&D intensity,

• It is particularly weak in translational research and industrial R&D,

• It is too geographically concentrated in the already prosperous parts of the country.

Science policy has been based on a model of correcting market failure, with an overwhelming emphasis on the supply side – ensuring strong basic science and a supply of skilled people. We need to move from this ‘supply side’ science policy to an innovation policy that explicitly creates demand for innovation, in order to meet society’s big strategic goals.

Historically, the main driver for state investment in innovation has been defence. Today, the largest fraction of government research and development supports healthcare – yet this is not done in a way that most effectively promotes either the health of our citizens or the productivity of our health and social care system.

Most pressingly, we need innovation to create affordable low carbon energy. Progress towards decarbonising our energy system is not happening fast enough, and innovation is needed to decrease the price of low carbon energy, increase its scale, and improve energy efficiency.

More attention needs to be paid to the wider determinants of innovation – organisation, management quality, skills, and the diffusion of innovation as much as discovery itself. We need to focus more on the formal and informal networks that drive innovation – and in particular on the geographical aspects of these networks. They work well in Cambridge – why aren’t they working in the North East or in Wales?

We do have examples of new institutions that have catalysed the rebuilding of innovation systems in economically lagging parts of the country. Translational research institutions such as Coventry’s Warwick Manufacturing Group, and Sheffield’s Advanced Manufacturing Research Centre, bring together university researchers and workers from companies large and small, help develop appropriate skills at all levels, and act as a focus for inward investment.

These translational research centres offer models for new interventions that will raise productivity levels in many sectors – not just in traditional ‘high technology’ sectors, but also in areas of the foundational economy such as social care. They will drive the innovation needed to create an affordable, humane and effective healthcare system. We must also urgently reverse the UK’s decades of neglect of research into new sustainable energy systems, to hasten the overdue transition to a low carbon economy. Developing such centres, at scale, will do much to drive economic growth in all parts of the country.

Continue to read the full (53 page) paper here (PDF).