Today saw the official opening of the Nottingham nanotechnology and nanoscience centre, which brings together some existing strong research areas across the University. I’ve made the short journey down the motorway from Sheffield to listen to a very high-quality programme of talks, with Sir Harry Kroto, co-discoverer of buckminsterfullerene, topping the bill. Also speaking were Don Eigler, from IBM (the originator of perhaps the most iconic image in all nanotechnology, the IBM logo made from individual atoms), Colin Humphreys, from the University of Cambridge, and Sir Fraser Stoddart, from UCLA.

There were some common themes in the first two talks (common, also, with Wade Adams’s talk in Norway described below). Both speakers talked about the great problems of the world and looked to nanotechnology to solve them. For Colin Humphreys, the solutions to the problems of sustainable energy and clean water are to be found in the material gallium nitride, or more precisely in the compounds of aluminium, indium and gallium nitride, which make it possible to build not just blue light-emitting diodes but LEDs that emit light at any wavelength between the infra-red and the deep ultra-violet. Gallium nitride-based blue LEDs were invented as recently as 1996 by Shuji Nakamura, but they are already a $4 billion market, and everyone will be familiar with the torches and bicycle lights that use them.

How can this help with the problem of access to clean drinking water? We should remind ourselves that 10% of world child mortality is directly related to poor water quality, and that half the hospital beds in the world are occupied by people with water-related diseases. One solution would be to use deep ultraviolet light to sterilise contaminated water. Deep UV works well for sterilisation because living organisms never developed a tolerance to these wavelengths, which don’t penetrate the atmosphere. UV at a wavelength of 270 nm does the job well, but existing lamps are impractical: they need high voltages, they are inefficient, and some of them contain mercury. AlGaN LEDs work well, and in principle they could be powered by solar cells at 4 V, which might allow every household to sterilise its water supply easily and cheaply. The problem is that their efficiency is still too low to treat flowing water. At blue wavelengths (400 nm) efficiency is very good, at 70%, but it drops precipitously at shorter wavelengths, and the reason for this is not yet understood theoretically.
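The wavelength of an LED is set by the bandgap of its semiconductor, which must roughly match the photon energy E = hc/λ. As a minimal sketch, the Python below converts the two wavelengths quoted in this paragraph (400 nm and 270 nm) into photon energies in electronvolts, using the exact SI values of the Planck constant, the speed of light and the elementary charge. The function name `photon_energy_ev` is our own, chosen for illustration.

```python
# Exact SI values (fixed by the 2019 redefinition of the SI base units)
PLANCK_H = 6.62607015e-34            # Planck constant, J s
SPEED_OF_LIGHT = 299_792_458.0       # speed of light, m/s
ELEMENTARY_CHARGE = 1.602176634e-19  # elementary charge, C (i.e. joules per eV)


def photon_energy_ev(wavelength_nm: float) -> float:
    """Return the energy of a photon of the given wavelength in eV, using E = hc / lambda."""
    wavelength_m = wavelength_nm * 1e-9
    energy_joules = PLANCK_H * SPEED_OF_LIGHT / wavelength_m
    return energy_joules / ELEMENTARY_CHARGE


for label, wavelength in [("blue", 400.0), ("deep UV", 270.0)]:
    print(f"{label:8s} {wavelength:5.0f} nm -> {photon_energy_ev(wavelength):.2f} eV")
```

This prints about 3.10 eV for the blue emitter and 4.59 eV for the deep-UV one, so each 270 nm photon carries roughly half as much energy again as a 400 nm photon, which calls for a correspondingly wider-bandgap, more aluminium-rich alloy.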

The contribution of solid-state lighting to solving the energy crisis arises from the efficiency of LEDs compared to tungsten light bulbs. People often underestimate how much energy is used in lighting domestic and commercial buildings. Globally, lighting accounts for 1,900 megatonnes of CO2; this is 70% of the total emissions from cars, and three times the amount due to aviation. In the UK, it accounts for 20% of the electricity generated, and in Thailand, for example, the figure is even higher, at 40%. But tungsten light bulbs, which account for 79% of sales, have an efficiency of only 5%. There is much talk now of banning tungsten light bulbs, but their replacement, the compact fluorescent, is not perfect either. Compact fluorescents have an efficiency of 15%, which is an improvement, but what is less well appreciated is that each bulb contains 4 mg of mercury. Replacing tungsten bulbs with compact fluorescents would lead to tonnes of mercury ending up in landfill.
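The comparisons in this paragraph can be run backwards to recover the figures they imply. The Python sketch below takes only the numbers quoted in the talk as inputs; the derived car and aviation totals, and the number of bulbs per tonne of mercury, are our own arithmetic rather than figures given by the speaker.

```python
# Inputs as quoted in the talk
LIGHTING_CO2_MT = 1_900      # megatonnes of CO2 attributed to lighting
FRACTION_OF_CARS = 0.70      # lighting emissions = 70% of car emissions
MULTIPLE_OF_AVIATION = 3     # lighting emissions = 3 x aviation emissions
MERCURY_PER_CFL_MG = 4       # mercury content of one compact fluorescent, mg

# Derived values: divide the lighting figure by each stated ratio
implied_cars_mt = LIGHTING_CO2_MT / FRACTION_OF_CARS
implied_aviation_mt = LIGHTING_CO2_MT / MULTIPLE_OF_AVIATION

# 1 tonne = 1,000 kg = 10^6 g = 10^9 mg
MG_PER_TONNE = 1_000_000_000
bulbs_per_tonne_mercury = MG_PER_TONNE // MERCURY_PER_CFL_MG

print(f"Implied car emissions:      {implied_cars_mt:,.0f} Mt CO2")
print(f"Implied aviation emissions: {implied_aviation_mt:,.0f} Mt CO2")
print(f"CFLs per tonne of mercury:  {bulbs_per_tonne_mercury:,}")
```

At 4 mg per bulb, a single tonne of mercury corresponds to 250 million compact fluorescents, which gives a sense of the scale of replacement needed before the mercury total reaches "tonnes".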

Could solid-state lighting do the job? What you can currently buy are blue LEDs (made from InGaN) that excite a yellow phosphor. The colour balance of these leaves something to be desired, and soon we will see blue or UV LEDs exciting red, green and blue phosphors, which will give a much better colour balance (you could also use a combination of red, green and blue LEDs, but green LED efficiencies are currently too low). The best efficiency in a commercial white LED is 30% (from Seoul Semiconductor), while the best in the lab (from Nichia) is currently 50%. The target is an efficiency of 50–80% at high drive currents, which would make LEDs more efficient than today’s most efficient light source, the sodium lamp, which produces its familiar orange glow at 45% efficiency. Meeting this target would make LEDs 10 times more efficient than filament bulbs and 3 times more efficient than compact fluorescents, with no mercury. In the US, replacing half of all filament bulbs would save 41 GW, and in the UK, replacing all of them would save 8 GW of power station capacity. The problem at the moment is cost, but progress in this area is so rapid that Humphreys is confident costs will fall dramatically within a few years.
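The "10 times" and "3 times" claims follow directly from the efficiencies quoted. As a minimal sketch, assuming the lower end of the 50–80% target, the Python below compares each light source with that target and works out how much electrical power each would draw to produce the same light output. The 1 W light output is an arbitrary illustrative choice, not a figure from the talk.

```python
# Electrical-to-light conversion efficiencies quoted in the talk
EFFICIENCY = {
    "tungsten filament": 0.05,
    "compact fluorescent": 0.15,
    "sodium lamp": 0.45,
    "white LED (commercial)": 0.30,
    "white LED (lab)": 0.50,
}

# Assumption: take the low end of the 50-80% target range
TARGET_LED = 0.50

# Arbitrary light output used to compare electrical input
LIGHT_OUTPUT_W = 1.0

for source, eff in EFFICIENCY.items():
    advantage = TARGET_LED / eff            # how many times more efficient the target LED is
    electrical_input = LIGHT_OUTPUT_W / eff  # watts drawn to emit LIGHT_OUTPUT_W of light
    print(f"{source:24s} target LED is {advantage:4.1f}x as efficient; "
          f"draws {electrical_input:5.1f} W per {LIGHT_OUTPUT_W:.0f} W of light")
```

A filament bulb draws 20 W for every watt of light it emits, against 2 W for an LED at the target efficiency; the ratio to compact fluorescents comes out at about 3.3, matching the "3 times" in the talk.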

Don Eigler also talked about societal challenges, but with a somewhat different emphasis. His talk was entitled “Nanotechnology: the challenge of a new frontier”. The questions he asked were “What challenges do we face as a society in dealing with this new frontier of nanotechnology, and how should we as a society make decisions about a new technology like nanotechnology?”

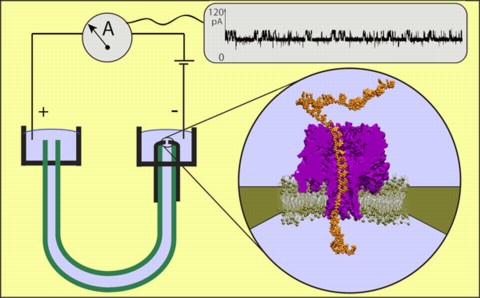

There are three types of nanotechnology, he said: evolutionary nanotechnology (historically larger technologies that have been shrunk to nanoscale dimensions), revolutionary nanotechnology (entirely new nanometre-scale technologies) and natural nanotechnology (cell biology, which offers inspiration for our own technologies). Evolutionary nanotechnologies include semiconductors and nanoparticles in cosmetics. Revolutionary nanotechnologies include carbon nanotubes, which might be used to build new logic structures that could supplant silicon, and the IBM Millipede data storage system. Natural nanotechnologies include bacterial flagellar motors.

Nanohysteria comes in different varieties too. Type 1 nanohysteria is greed-driven “irrational exuberance”, based on the idea that nanotechnology will change everything very soon, as touted by investment tipsters and consultants who want to take people’s money off them. What’s wrong with this is the absence of critical thought. Type 2 nanohysteria is the opposite: fear-driven irrational paranoia, exemplified by the grey goo scenario of out-of-control self-replicating molecular assemblers or nanobots. What’s wrong with this, again, is the absence of critical thought. Prediction is difficult, but Eigler thinks that self-replicating nanobots are not going to happen any time soon, if ever.

What else do people fear about nanotechnology? Eigler recently met a young person with strong views: that nanotech is scary, that it will harm the biosphere, that it will create new weapons, and that it is being driven by greedy individuals and corporations. In short, it is not just wrong, it is evil. Where did these ideas come from? If you look on the web, you see talk of superweapons made from molecular assemblers. What you don’t find on the web are statements like “My grandmother is still alive today because nanotechnology saved her life”. Why is this? Because nanotechnology has not yet provided a tangible benefit to grandmothers!

Candidates that might change this include gold nanoshell cancer therapy, as developed by Naomi Halas at Rice. This particular therapy may not work in humans, but something similar will. Another example is the work of Sam Stupp at Northwestern, who is making nanofibres that cause neural progenitor cells to turn into new neurons rather than scar tissue, holding out the hope of regenerative medicine that could repair spinal cord damage.

As an example of how easily the wrong conclusions can be drawn, Eigler pointed to his own work: he made the smallest logic circuit, 12 nm by 17 nm, out of carbon monoxide molecules. But carbon monoxide is a deadly poison; shouldn’t we worry about this? Let’s do the sum: 18 CO molecules are needed for one transistor. For context, he breathes in about 2 billion trillion carbon monoxide molecules a day, so every day he breathes in enough to make 160 million computers.
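Eigler’s sum can be checked in a few lines of Python. Taking his figures at face value (18 CO molecules per transistor, about 2 × 10^21 CO molecules breathed per day, enough for 160 million computers), the sketch below works out how many transistors’ worth of CO that is, and how many transistors per computer the numbers implicitly assume. That per-computer figure was not stated in the talk; it is only what the other numbers imply.

```python
# Figures as quoted in the talk
CO_PER_TRANSISTOR = 18        # CO molecules per transistor in Eigler's CO logic circuit
CO_BREATHED_PER_DAY = 2e21    # "2 billion trillion" CO molecules breathed per day
COMPUTERS_PER_DAY = 160e6     # "160 million computers"

# How many transistors could be built from one day's intake of CO
transistors_per_day = CO_BREATHED_PER_DAY / CO_PER_TRANSISTOR

# Transistors per computer implied by the 160 million figure (derived, not quoted)
implied_transistors_per_computer = transistors_per_day / COMPUTERS_PER_DAY

print(f"Transistors' worth of CO breathed per day: {transistors_per_day:.2e}")
print(f"Implied transistors per computer:          {implied_transistors_per_computer:.2e}")
```

The result, about 1.1 × 10^20 transistors a day or roughly 7 × 10^11 per computer, makes the point: the quantity of CO locked up in such circuits is negligible next to what we inhale anyway.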

What could the green side of nanotechnology be? We could have better materials that are lighter, stronger and more easily recyclable, and this will reduce energy consumption. Perhaps we can use nanotechnology to reduce consumption of natural resources and to help recycling. We can’t yet prove that these benefits will follow, but Eigler believes they are likely.

There is a real risk in nanotechnology if it is used without evaluating the consequences. The widespread introduction of nanoparticulates into the environment would be an example of this. So how do we know if something is safe? We need to think it through, but we can’t guarantee that anything is absolutely safe. The principles should be that we eliminate fantasies, understand the different motivations that people have, and honestly assess risk and benefit. We need informed discussion that is critical, creative, inclusive and respectful. We need to speak with knowledge and respect, and listen with zeal. Scientists have not always been good at this, and we need to get much better. Our best weapons are our traditions of rigorous honesty and our tolerance for diverse beliefs.